How to Build an AI Agent for Your SaaS

To build a truly effective AI agent, you need to go beyond simply plugging into a Large Language Model (LLM). The real magic happens when you give that LLM a framework to reason, plan, and execute tasks. It's about building a program that can make its own decisions and use various tools to hit a specific goal.

Think of it this way: the LLM is the brilliant brain, but it needs arms and legs to interact with the world.

Your Conceptual Map to Building an AI Agent

Before you write a single line of code, it’s essential to have a solid mental model of how these systems actually work. This isn't just about memorizing the latest jargon; it's about understanding the fundamental architecture. When you get this right, every technical choice you make—from the framework you select to the APIs you integrate—becomes more strategic and purposeful, especially in a B2B SaaS environment.

The first step is appreciating the jump from basic automation to what we call agentic AI. They are worlds apart.

- Simple Automation: This is the old way. It’s task-driven and follows a rigid, pre-written script. A classic example is a workflow that fires off a generic email whenever a new user signs up. It follows orders, but it doesn’t think.

- Agentic AI: This is goal-driven and dynamic. Instead of a script, you give the agent a high-level objective, like "onboard new user X." It then figures out the best way to do that—maybe by verifying their email, creating a personalized workspace, and then sending a genuinely helpful welcome message.

This difference is critical. You're not just programming a sequence of if-then statements; you're designing a system that can reason its way through a problem. It’s a huge leap from the rule-based "expert systems" of the past. The journey from the symbolic AI of the 1950s to today's learning models is what makes these autonomous agents possible.

The Core Components of an AI Agent

Building an agent means assembling a few key technological pieces. Each part has a distinct job, and together they enable the agent to perceive its environment, think through a problem, and take action.

The Large Language Model (LLM) is the brain of the whole operation, providing the core reasoning and language-processing power. But an LLM on its own is just a sophisticated text generator. To become a true agent, it needs a supporting cast.

An AI agent acts like a skilled coordinator, orchestrating multiple capabilities while maintaining a holistic understanding of the task. It can make informed decisions about what to do next based on what it learns along the way, much like a human expert would.

This coordination is where specific frameworks and tools come into play. The table below breaks down the core building blocks that form the foundation of any modern AI agent.

Key AI Agent Components at a Glance

| Component | Primary Function | Example Technology |
|---|---|---|
| Large Language Model (LLM) | Provides core reasoning, planning, and language capabilities. | GPT-4o, Claude 3, Llama 3 |
| Agentic Framework | Orchestrates the agent's logic, state, and tool usage. | LangChain, LlamaIndex, Autogen |
| Vector Database | Acts as the agent's long-term memory, storing and retrieving information. | Pinecone, Weaviate, Chroma |
| Tools/APIs | Gives the agent the ability to act and interact with the outside world. | Your SaaS API, Google Search API |

Understanding how these components interact is key to building an agent that doesn't just talk, but does.

Connecting Concepts to Business Value

When you assemble these pieces correctly, you start to unlock real, tangible business value.

Imagine an agent built for a project management SaaS. A user might ask, "Summarize the progress on the Q3 marketing campaign."

The agentic framework would then direct the LLM to use a specific tool (in this case, your company's internal API) to pull all the relevant project data. The LLM then processes that raw data, formulates a clear and concise summary, and delivers it to the user. This simple interaction shows how AI can be applied in business to solve practical, everyday problems.
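To make that flow concrete, here is a minimal Python sketch. `fetch_project_tasks` is a hypothetical tool standing in for your internal API, and `summarize_with_llm` is a placeholder for the real model call; it builds a deterministic summary so the example runs offline.

```python
from dataclasses import dataclass


@dataclass
class Task:
    title: str
    status: str  # "done", "in_progress", or "todo"


def fetch_project_tasks(project: str) -> list[Task]:
    """Hypothetical tool: stands in for a call to your internal project API."""
    fake_db = {
        "Q3 marketing campaign": [
            Task("Draft launch blog post", "done"),
            Task("Design ad creatives", "in_progress"),
            Task("Schedule webinar", "todo"),
        ]
    }
    return fake_db.get(project, [])


def summarize_with_llm(project: str, tasks: list[Task]) -> str:
    """Placeholder for the LLM step: a real agent would send the raw data to the model."""
    if not tasks:
        return f"I couldn't find any tasks for '{project}'."
    done = sum(t.status == "done" for t in tasks)
    open_items = [t.title for t in tasks if t.status != "done"]
    return (f"{project}: {done} of {len(tasks)} tasks complete. "
            f"Still open: {', '.join(open_items)}.")


def answer_progress_question(project: str) -> str:
    # 1. Call the data tool (in a real agent, the LLM decides to use this tool).
    tasks = fetch_project_tasks(project)
    # 2. Let the "LLM" turn raw data into a concise summary for the user.
    return summarize_with_llm(project, tasks)


print(answer_progress_question("Q3 marketing campaign"))
```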

Designing a Resilient AI Agent Architecture

Let's be blunt: a fragile AI agent is a liability. For any B2B or SaaS product, an agent that crumbles under unexpected user input or can't handle a growing user base isn't just a technical glitch. It's a direct hit to your reputation and your customers' trust. This is exactly why a thoughtful architecture is your most valuable asset. It’s what turns a clever prototype into a robust, production-ready system.

The mission is to build an agent that's both smart and sturdy. It needs a solid blueprint that can handle real-world complexity, behave predictably, and scale right alongside your business. This means we have to move past simple, linear workflows and embrace architectural patterns built for the messy reality of user interactions.

Choosing Your Architectural Pattern

Your first big decision is picking the core logic that will drive your agent’s behavior. A pattern I’ve seen work incredibly well in practice is ReAct (Reason + Act). This framework essentially teaches the agent to "think out loud" by generating a reasoning trace before it takes an action. This simple but powerful approach makes the agent’s decision-making process far more transparent and way easier to debug when things go wrong.

Imagine an AI agent built to help with customer onboarding. When a new user asks, "How do I invite my team?" a ReAct-based agent would follow a logical sequence:

- Thought: "Okay, the user wants to invite their team. I need to find the official procedure in our knowledge base."

- Act: Use the search_docs tool with the query "team invitation process."

- Observation: The tool pulls back the step-by-step instructions.

- Thought: "Great, I've got the instructions. Now I'll format them into a clear, easy-to-read response."

- Act: Present the formatted steps to the user.

This pattern gives you a clear, auditable trail of the agent's logic. That's invaluable for troubleshooting and making improvements. It helps turn the AI "black box" into something you can actually understand and manage.
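Here is a stripped-down ReAct loop in Python, offered as a sketch rather than how any particular framework implements it. `scripted_llm` is a hypothetical stand-in that replays the Thought/Action steps from the onboarding example, and `search_docs` returns a canned article; in a real agent both would call your LLM and knowledge base. The returned `trace` is the auditable trail of the agent's reasoning.

```python
import re
from typing import Callable


def search_docs(query: str) -> str:
    """Hypothetical knowledge-base tool returning a canned help article."""
    articles = {
        "team invitation process":
            "1. Open Settings > Team. 2. Click Invite. 3. Enter emails and send.",
    }
    return articles.get(query, "No matching article found.")


TOOLS: dict[str, Callable[[str], str]] = {"search_docs": search_docs}
ACTION_RE = re.compile(r'Action: (\w+)\["(.*)"\]')


def scripted_llm(transcript: str) -> str:
    """Stand-in for the LLM: replays the Thought/Action steps a model would generate."""
    if "Observation:" not in transcript:
        return ("Thought: The user wants to invite their team. I need the official procedure.\n"
                'Action: search_docs["team invitation process"]')
    instructions = transcript.rsplit("Observation: ", 1)[1].strip()
    return ("Thought: I have the instructions. I'll format them for the user.\n"
            f"Final Answer: Here's how to invite your team: {instructions}")


def react_agent(question: str, llm: Callable[[str], str] = scripted_llm,
                max_steps: int = 5) -> tuple[str, list[str]]:
    transcript = f"Question: {question}"
    trace: list[str] = []
    for _ in range(max_steps):
        step = llm(transcript)                       # Reason: think, then pick an action
        trace.append(step)
        transcript += "\n" + step
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), trace
        match = ACTION_RE.search(step)
        if match is None:
            return "Sorry, I couldn't work out the next step.", trace
        tool_name, tool_input = match.groups()
        tool = TOOLS.get(tool_name)                  # Act: run the chosen tool
        observation = tool(tool_input) if tool else f"Unknown tool: {tool_name}"
        trace.append(f"Observation: {observation}")  # Observe: feed the result back
        transcript += f"\nObservation: {observation}"
    return "Stopped after reaching the step limit.", trace


answer, trace = react_agent("How do I invite my team?")
print("\n".join(trace))
print(answer)
```

The `max_steps` cap is a simple guard that keeps a confused model from looping forever.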

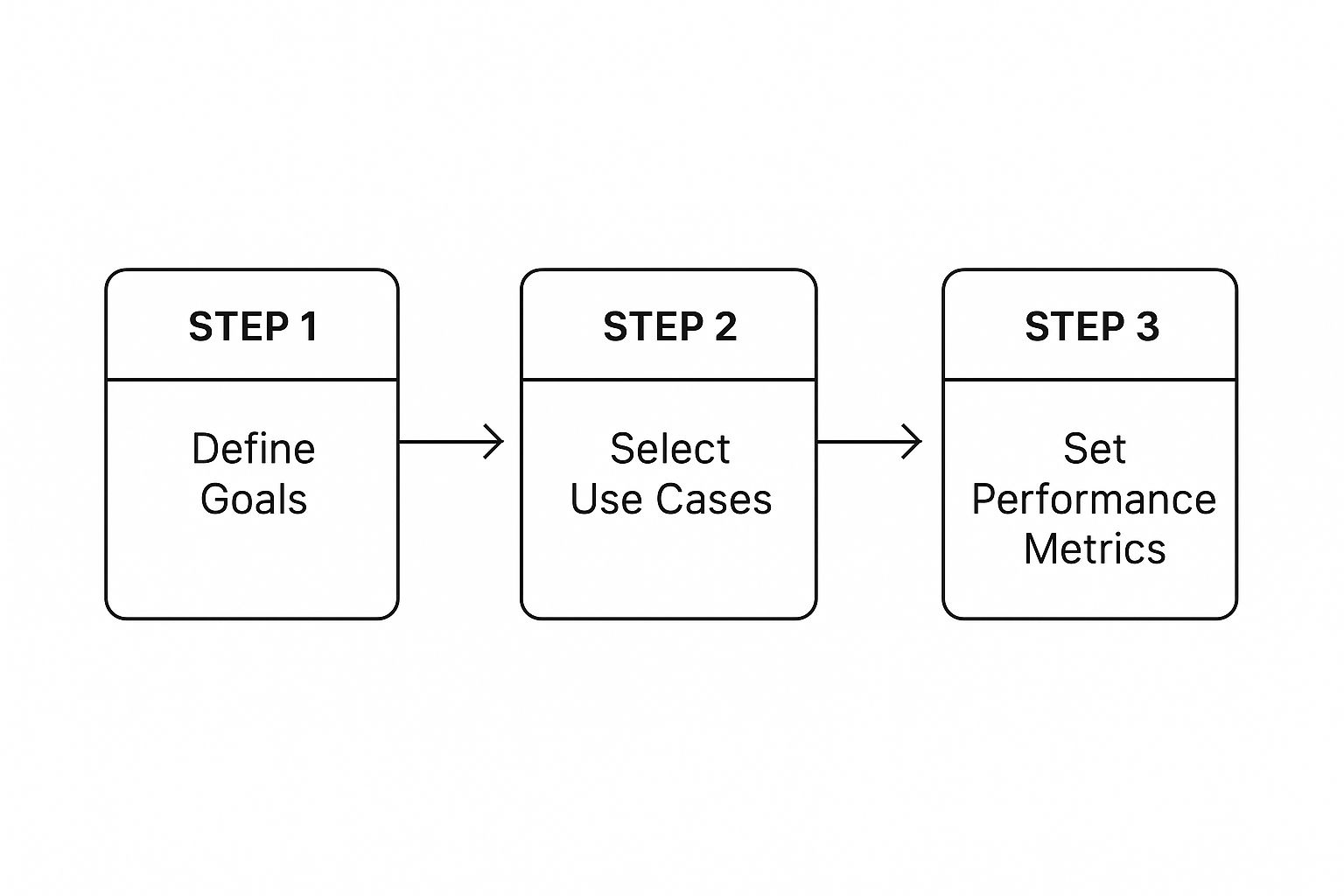

As you can see, a successful AI agent starts with clear business goals, not just a pile of tech.

Structuring Your Core Components

Once you have a framework like ReAct guiding your agent, it's time to build out the key components. A truly resilient architecture is modular, meaning you can swap out or upgrade individual parts without having to tear down and rebuild the entire system.

The LLM Brain

Picking your Large Language Model is a critical balancing act. Sure, powerhouse models like GPT-4o offer incredible reasoning, but they come with a hefty price tag and higher latency. I've found that for many B2B tasks, a smaller, faster model like GPT-4o-mini or a solid open-source alternative can deliver 80% of the performance at 20% of the cost. My advice? Always start with a cost-effective model for development and only upgrade if you hit a wall and genuinely need more power.

The best LLM isn't always the biggest or most expensive. It's the one that delivers the required performance for your specific use case with the best balance of speed, cost, and accuracy.

The Memory System

An agent without memory is an agent with amnesia. It can't hold a coherent conversation, and that's a deal-breaker. Your vector database is the foundation of its long-term memory, storing everything from past conversations to user preferences as numerical representations (embeddings).

When you're designing the memory, think in layers:

- Short-Term Memory: This is a simple buffer holding the last few back-and-forths. It’s lightning-fast and essential for maintaining immediate context.

- Long-Term Memory: This is your vector database, where you store summarized conversations and key facts that can be retrieved with semantic search.

This layered approach gives the agent quick access to what was just said, while also letting it pull from a deep well of long-term knowledge when needed.
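A minimal sketch of the two layers, assuming a toy word-count "embedding" and cosine similarity in place of a real embedding model and a vector database such as Pinecone or Weaviate. `AgentMemory` and its methods are illustrative names, not any library's API.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would call an embedding model."""
    return Counter(word.strip(".,!?").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class AgentMemory:
    def __init__(self, short_term_size: int = 4):
        # Short-term: the last few turns, kept verbatim; old turns fall off automatically.
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        # Long-term: (text, vector) pairs; stands in for a vector database.
        self.long_term: list[tuple[str, Counter]] = []

    def add_turn(self, turn: str) -> None:
        self.short_term.append(turn)

    def remember(self, fact: str) -> None:
        self.long_term.append((fact, embed(fact)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        """Semantic-search stand-in: rank stored facts by similarity to the query."""
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

    def build_context(self, query: str) -> str:
        facts = "\n".join(self.recall(query))
        recent = "\n".join(self.short_term)
        return f"Relevant facts:\n{facts}\n\nRecent conversation:\n{recent}"


memory = AgentMemory(short_term_size=2)
memory.remember("The customer prefers weekly email summaries.")
memory.remember("The customer's plan renews in March.")
for turn in ["User: Hi", "Agent: Hello!", "User: When does my plan renew?"]:
    memory.add_turn(turn)
print(memory.build_context("When does my plan renew?"))
```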

Designing a Modular Tool System

The real magic of an AI agent comes from its ability to interact with the world through tools. A "tool" is just a function or an API call the agent can trigger to get information or perform an action. For our customer onboarding agent, tools might include create_user_workspace, send_welcome_email, or schedule_demo_call.

You absolutely want to design your tool system for flexibility. Avoid the temptation to hardcode tool logic directly into the agent's main loop. Instead, create a registry of available tools that the agent can consult. Each tool should have a clear name and a descriptive docstring explaining exactly what it does, what inputs it needs, and what it returns.

This modularity is a lifesaver. It means you can add, remove, or update tools without messing with the agent's core reasoning engine. Launch a new product feature? Just add a new tool for it, and the agent instantly gets a new superpower. This is how you build an AI agent that can actually evolve with your product.
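One way to sketch such a registry in plain Python: a hypothetical `tool` decorator records each function by name, and its signature and docstring become the description the LLM reads. The tool bodies are placeholders for real API calls. LangChain ships its own `@tool` decorator built on a similar idea, but the code below doesn't use it.

```python
import inspect
from typing import Callable

TOOL_REGISTRY: dict[str, Callable[..., str]] = {}


def tool(func: Callable[..., str]) -> Callable[..., str]:
    """Register a function as an agent tool; refuse tools without a docstring."""
    if not func.__doc__:
        raise ValueError(f"Tool {func.__name__} needs a docstring")
    TOOL_REGISTRY[func.__name__] = func
    return func


@tool
def create_user_workspace(user_id: str, plan: str = "trial") -> str:
    """Create a workspace for a new user. Inputs: user_id, plan. Returns the workspace ID."""
    return f"ws-{user_id}-{plan}"  # placeholder for a real API call


@tool
def send_welcome_email(user_id: str) -> str:
    """Send the welcome email to a user. Input: user_id. Returns a delivery status."""
    return f"queued welcome email for {user_id}"  # placeholder for a real API call


def describe_tools() -> str:
    """Render the registry as text for the agent's system prompt."""
    return "\n".join(
        f"{name}{inspect.signature(fn)}: {inspect.getdoc(fn)}"
        for name, fn in TOOL_REGISTRY.items()
    )


def call_tool(name: str, **kwargs) -> str:
    """Dispatch a tool call chosen by the LLM; unknown names return an error string."""
    if name not in TOOL_REGISTRY:
        return f"Error: unknown tool '{name}'"
    return TOOL_REGISTRY[name](**kwargs)


print(describe_tools())
print(call_tool("create_user_workspace", user_id="u42"))
```

Adding a capability means writing one more decorated function; the agent loop and `call_tool` stay untouched.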

Selecting Your Modern AI Agent Tech Stack

Picking the right technologies to build your AI agent can feel overwhelming. You're faced with a sea of frameworks, models, and databases, and it's easy to get lost. The key isn't to find the single "best" tool, but to assemble the right stack—one that matches your team's expertise, your project's scope, and your budget.

This is a relatively new problem. The field took off after 2015 with major breakthroughs in deep reinforcement learning. That was the year DeepMind's DQN, published in Nature, reached human-level play on many classic Atari games, and the year OpenAI was founded. Fast forward to 2020, and models like GPT-3 showed they could generate text that was startlingly human, paving the way for the agents we're building today. If you're interested in the backstory, a timeline of AI history is a great read.

Thanks to that rapid progress, we have incredible tools at our fingertips. Let's break down how to choose them.

Choosing Your Agent Development Framework

The framework is the central nervous system of your agent. It’s what connects the LLM brain to its tools and memory, so this is a decision you want to get right from the start. Three names dominate the conversation, and each has its own philosophy.

- LangChain: This is the old guard—the most mature and feature-packed framework out there. Think of it as a massive toolbox with an integration for just about everything. It’s a great fit for complex, multi-step workflows where you need a ton of flexibility.

- LlamaIndex: While it can build agents, LlamaIndex truly shines at one thing: Retrieval-Augmented Generation (RAG). If your agent’s main job is to pull information from and reason over a large body of your own documents, LlamaIndex was built from the ground up for that exact purpose.

- Autogen: A fascinating framework from Microsoft Research, Autogen is all about creating multi-agent systems. Instead of building one monolithic agent, you can design a team of specialized agents that collaborate on a problem. It’s perfect for tackling really complex problem-solving or simulation tasks.

To make the choice clearer, here’s a quick breakdown of how these popular frameworks stack up against each other.

Comparison of AI Agent Development Frameworks

| Framework | Best For | Key Feature | Learning Curve |
|---|---|---|---|
| LangChain | Complex, custom agentic workflows | Vast library of integrations | Moderate to High |
| LlamaIndex | Data-intensive RAG applications | Advanced data ingestion and indexing | Moderate |
| Autogen | Multi-agent collaboration | Orchestrating multiple specialized agents | High |

For most SaaS projects, I find that LangChain is the most versatile starting point. But again, if your agent is all about RAG, LlamaIndex will probably get you there faster.

The Great Debate: LLM Providers vs. Open-Source

The Large Language Model (LLM) you choose is the engine of your agent. It directly dictates performance, cost, and latency. Your first big decision here is whether to use a proprietary model through an API or go down the route of hosting an open-source model yourself.

Proprietary Models (OpenAI, Anthropic, Google):

This is the fastest way to get started. You get top-tier performance without any of the infrastructure headaches. The trade-offs are pretty clear: costs scale directly with usage, and you're sending your data to a third party, which can be a non-starter for some companies.

Open-Source Models (Llama 3, Mistral):

Running your own model gives you total control over your data and can be cheaper at massive scale. But don't underestimate the lift—it demands serious technical know-how for managing GPU infrastructure, fine-tuning, and keeping everything running smoothly. For most B2B companies, that operational overhead is a huge deterrent.

My advice from experience? Start with a proprietary model like GPT-4o-mini or Claude 3 Haiku. They hit a sweet spot of intelligence, speed, and cost-effectiveness. You can always architect your agent to be model-agnostic, letting you swap in a different LLM later if your needs change.
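A model-agnostic design can be as simple as having the agent depend on a small interface rather than on one provider's SDK. In this sketch, `ChatModel`, `EchoModel`, and `HostedModel` are hypothetical names; `HostedModel.complete` is where you would call your provider's client.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Anything that can turn a prompt into a completion."""
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Offline stand-in for local development and tests."""
    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class HostedModel:
    """Hypothetical adapter: wrap your provider's SDK call inside complete()."""
    def __init__(self, model_name: str, client=None):
        self.model_name = model_name
        self.client = client  # e.g. a provider SDK client; unused in this sketch

    def complete(self, prompt: str) -> str:
        raise NotImplementedError("Call your provider's API here")


class Agent:
    def __init__(self, model: ChatModel):
        self.model = model  # the agent only knows about the interface

    def run(self, user_input: str) -> str:
        return self.model.complete(f"User asked: {user_input}")


agent = Agent(EchoModel())
print(agent.run("hello"))
```

Swapping GPT-4o-mini for Claude 3 Haiku, or for a self-hosted model, then means writing one new adapter class instead of touching the agent.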

Assembling The Supporting Cast

An agent isn't just a framework and an LLM. You'll need a few other key technologies to handle things like memory and debugging.

- Vector Databases: To give your agent a memory that persists, you’ll need a vector database. Tools like Pinecone or Weaviate store information as numerical representations (embeddings), which allows the agent to quickly find and recall relevant context from past conversations or documents.

- Observability Tools: Figuring out why an agent did something can feel like black magic. Observability platforms like Langfuse or Arize AI give you a window into the agent’s thought process. You can trace its steps, spot errors, and understand its decision-making.

- Workflow Orchestration: Tying all these different systems together cleanly is a job in itself. It's worth looking into various AI workflow automation tools to see how you can connect the dots and build a truly cohesive system.
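Observability platforms like Langfuse and Arize AI collect step-level data through their own SDKs. To show the kind of data involved, here is a dependency-free sketch: a hypothetical `traced` decorator that records each step's inputs, output or error, and duration into an in-memory list.

```python
import functools
import time

TRACE: list[dict] = []


def traced(step_name: str):
    """Record inputs, output or error, and duration for each call of an agent step."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            record = {"step": step_name, "args": args, "kwargs": kwargs}
            start = time.perf_counter()
            try:
                record["output"] = func(*args, **kwargs)
                return record["output"]
            except Exception as exc:
                record["error"] = repr(exc)
                raise
            finally:
                # Runs on success and failure alike, so every call leaves a record.
                record["seconds"] = round(time.perf_counter() - start, 4)
                TRACE.append(record)
        return wrapper
    return decorator


@traced("lookup_invoice")
def lookup_invoice(invoice_id: str) -> dict:
    """Hypothetical tool used only to demonstrate tracing."""
    if not invoice_id.startswith("INV-"):
        raise ValueError(f"bad invoice id: {invoice_id}")
    return {"id": invoice_id, "amount": 120.0}


lookup_invoice("INV-1")
try:
    lookup_invoice("oops")
except ValueError:
    pass
for record in TRACE:
    print(record)
```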

By being deliberate about each piece of your tech stack, you're setting the foundation for an AI agent that’s not just powerful on day one, but also scalable and maintainable for the long haul.

The Hands-On Build and Integration Process

Alright, the architecture is mapped out and your tech stack is chosen. Now for the fun part: turning that blueprint into something real. This is where code meets concept, and you actually start to build an AI agent that can think, act, and plug into your products. Moving from a static plan to a working prototype involves a clean setup, some smart coding, and a serious eye on security.

First things first, let's set up a clean, isolated development environment. I can't stress this enough. It’s not just a formality; it’s what keeps dependency nightmares at bay and ensures the agent you build on your machine doesn't throw a fit when you deploy it.

Setting Up Your Development Workspace

Before you even think about writing the agent's logic, get your project folder and a virtual environment set up. This is a non-negotiable habit that will save you from countless hours of debugging down the line.

For a standard Python project, it’s pretty straightforward:

- Make a new project folder and jump into it.

- Spin up a virtual environment. This walls off your project's dependencies from your main Python installation.

- Activate it. This ensures any packages you install are specific to this project.

- Install your core libraries. Pull in things like langchain, langchain-openai, and any other dependencies your stack requires.

Next, let's talk API keys. Never, ever hardcode them directly in your code. Please. Use a .env file to store secrets like your OpenAI key and load them as environment variables. It’s a basic security practice that also makes switching between dev and production keys a breeze.
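The python-dotenv package's `load_dotenv()` is the usual way to do this. The stdlib-only sketch below shows the idea with a hypothetical `load_env_file` helper that handles simple `KEY=VALUE` lines (it skips many edge cases python-dotenv covers), plus a `require_secret` check that fails fast when a key is missing. `OPENAI_API_KEY` is the variable the official OpenAI Python SDK reads by default. Remember to add `.env` to your `.gitignore`.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> None:
    """Minimal .env loader: KEY=VALUE lines and '#' comments; existing env vars win."""
    env_path = Path(path)
    if not env_path.exists():
        return
    for raw_line in env_path.read_text().splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        os.environ.setdefault(key.strip(), value.strip().strip('"').strip("'"))


def require_secret(name: str) -> str:
    """Fail fast with a clear message instead of sending requests with a missing key."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"{name} is not set; add it to .env or your secrets manager")
    return value


load_env_file()
# api_key = require_secret("OPENAI_API_KEY")
```

Because real environment variables take precedence, production can inject keys from a secrets manager while developers keep using a local `.env`.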

Defining the Agent's Core Logic

With the workspace ready, it's time to build the agent's brain—its reasoning loop and memory. This is what brings it to life, teaching it how to hold a thought and make a decision.

A solid approach is to create a "state" object. Think of this as the agent's short-term memory. It can be a simple dictionary or a class that keeps track of the conversation, information it has extracted, and what it needs to do next.

The agent's state isn't just a bucket for data; it's a living snapshot of its "mind." It mirrors how we think—holding the original query, figuring out the user's intent, and noting key details to build a complete picture.

This state gets passed through a series of nodes or functions, where each one handles a specific job. For instance, one node might classify what the user wants, another could pull out key details from their message, and a third could summarize the conversation so far. By chaining these nodes together, you create a workflow that guides the agent from input to intelligent output.
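A minimal sketch of that state-and-nodes pattern, with hypothetical node names. The keyword rules inside each node are placeholders for what would normally be LLM calls; the point is the shape: every node takes the state, updates it, and hands it on.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    query: str
    intent: str = ""
    details: dict = field(default_factory=dict)
    summary: str = ""


def classify_intent(state: AgentState) -> AgentState:
    # Placeholder keyword rule; a real node would ask the LLM to classify the request.
    state.intent = "billing" if "invoice" in state.query.lower() else "general"
    return state


def extract_details(state: AgentState) -> AgentState:
    # Placeholder extraction; a real node might ask the LLM for structured output.
    for token in state.query.split():
        cleaned = token.upper().rstrip("?.,!")
        if cleaned.startswith("INV-"):
            state.details["invoice_id"] = cleaned
    return state


def summarize(state: AgentState) -> AgentState:
    state.summary = f"intent={state.intent}, details={state.details}"
    return state


PIPELINE = [classify_intent, extract_details, summarize]


def run_pipeline(query: str) -> AgentState:
    state = AgentState(query=query)
    for node in PIPELINE:
        state = node(state)  # each node reads the state, updates it, and passes it on
    return state


print(run_pipeline("Can you resend invoice INV-1042?").summary)
# intent=billing, details={'invoice_id': 'INV-1042'}
```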

Granting Access to Tools and APIs

An agent is only as good as the actions it can take. Its real power comes from its ability to interact with the world outside its own code. We do this by giving it "tools"—basically, functions that let it do things like search a database, call an external API, or, most importantly, talk to your own SaaS product's backend.

When you build an AI agent for a B2B app, this integration is everything.

- Define Clear Functions: Each tool needs to be a well-defined function. Give it a descriptive name and a clear docstring explaining what it does, what inputs it needs, and what it returns. The LLM relies on this to figure out when and how to use the tool.

- Handle Authentication Securely: When the agent needs to hit your product's API, it has to be secure. Use API tokens or OAuth, and make sure the agent only has the permissions it absolutely needs to do its job. Nothing more.

- Parse Outputs Reliably: LLMs and APIs alike can be a bit… creative with what they return. You need robust parsing logic to handle whatever the tool sends back, including errors or malformed data, before you feed it into the agent's reasoning loop.
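A sketch of defensive parsing, assuming a tool may hand back a dict, a JSON string, malformed text, or an exception. The hypothetical `parse_tool_output` normalizes all of these into a `ToolResult`, so the agent sees a readable error message it can reason about instead of crashing.

```python
import json
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class ToolResult:
    ok: bool
    data: Optional[dict] = None
    error: str = ""


def parse_tool_output(raw: Any, required_keys: tuple[str, ...] = ()) -> ToolResult:
    """Normalize whatever a tool returned into a ToolResult the agent can reason about."""
    if isinstance(raw, Exception):
        return ToolResult(ok=False, error=f"Tool raised {type(raw).__name__}: {raw}")
    if isinstance(raw, (str, bytes)):
        try:
            raw = json.loads(raw)
        except json.JSONDecodeError as exc:
            return ToolResult(ok=False, error=f"Invalid JSON from tool: {exc.msg}")
    if not isinstance(raw, dict):
        return ToolResult(ok=False, error=f"Expected a JSON object, got {type(raw).__name__}")
    missing = [k for k in required_keys if k not in raw]
    if missing:
        return ToolResult(ok=False, error=f"Missing fields: {', '.join(missing)}")
    return ToolResult(ok=True, data=raw)


print(parse_tool_output('{"id": 7, "status": "paid"}', ("id", "status")))
print(parse_tool_output("<html>502 Bad Gateway</html>"))
```

On failure, the `error` string can be passed back to the LLM as the observation, which often lets it retry or explain the problem to the user.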

Getting your agent connected to backend services is the whole game. This requires a solid grasp of how systems should talk to each other. For a deeper dive, reading up on data integration best practices will help ensure your connections are both secure and efficient.

By structuring your tools this way, you end up with a modular, extensible system. As your product grows, you can easily add new tools to expand what your agent can do without having to rip apart its core logic. It grows right alongside your platform, taking you one giant step closer to a production-ready agent.

Taking Your AI Agent from the Lab to the Real World

Getting your AI agent working on your local machine is one thing. Launching it for real users? That’s a whole different ball game. The controlled, predictable environment of development is nothing like the messy, unpredictable nature of live customer interactions. This is where the real work begins—the rigorous testing, smart deployment, and relentless monitoring that turns a cool prototype into a rock-solid business tool.

Forget everything you know about traditional software testing. You can't just write a unit test to see if a function returns true. AI agents are, by their nature, non-deterministic. You're not testing for simple pass/fail; you're evaluating behavior, resilience, and the quality of the outcomes. It requires a complete shift in mindset.

How to Actually Measure if Your Agent is Any Good

Your main objective here is to figure out if the agent can do its job in the wild. This means looking past simple accuracy scores and digging into metrics that tell the real story of its performance.

We have to get more sophisticated with our evaluation. Here’s what I focus on:

- Task Completion Rate: It’s the most basic question: does it work? If a user asks the agent to "find the three most recent invoices for customer X and email them to their accountant," does it actually happen?

- Hallucination Detection: This is a big one. How often does your agent just make things up? Fabricating information is a massive trust-killer, and you need to track this religiously.

- Tool Usage Accuracy: When the agent needs to call an API or use a specific tool, does it pick the right one? More importantly, does it feed it the right data? Garbage in, garbage out.

- Handling the Weird Stuff: What happens when a user types in something vague, irrelevant, or even tries to trick it? A good agent should handle these edge cases gracefully, maybe by asking for clarification, instead of crashing or spewing nonsense.
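A small evaluation harness can turn task completion and tool usage into numbers. The sketch below assumes your agent can report which tool it called, with what arguments, and its final answer (the hypothetical `AgentRun`); `fake_agent`, `list_invoices`, and `ask_clarification` are illustrative stand-ins. The `must_contain` check is only a crude proxy for answer quality; catching hallucinations usually means comparing answers against source documents or reviewing them, which this sketch leaves out. The vague-prompt case shows one way to encode an edge case: the expected behavior is asking for clarification.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    expected_tool: str   # tool the agent should call
    expected_args: dict  # arguments it should pass to that tool
    must_contain: str    # phrase a correct final answer should include


@dataclass
class AgentRun:
    tool: str
    args: dict
    answer: str


def evaluate(agent: Callable[[str], AgentRun], cases: list) -> dict:
    tool_hits = arg_hits = completed = 0
    for case in cases:
        run = agent(case.prompt)
        tool_ok = run.tool == case.expected_tool
        args_ok = tool_ok and run.args == case.expected_args
        tool_hits += tool_ok
        arg_hits += args_ok
        # Completed only if the right call was made AND the answer looks right.
        completed += args_ok and case.must_contain.lower() in run.answer.lower()
    n = len(cases)
    return {
        "task_completion_rate": completed / n,
        "tool_selection_accuracy": tool_hits / n,
        "tool_argument_accuracy": arg_hits / n,
    }


def fake_agent(prompt: str) -> AgentRun:
    """Stand-in agent: always looks up invoices for 'acme', otherwise asks for clarification."""
    if "invoice" in prompt.lower():
        return AgentRun("list_invoices", {"customer": "acme", "limit": 3},
                        "Sent the 3 most recent invoices to the accountant.")
    return AgentRun("ask_clarification", {}, "Could you tell me which customer you mean?")


cases = [
    EvalCase("Email the three latest invoices for acme to their accountant",
             "list_invoices", {"customer": "acme", "limit": 3}, "3 most recent invoices"),
    EvalCase("Email the latest invoices for globex to their accountant",
             "list_invoices", {"customer": "globex", "limit": 3}, "3 most recent invoices"),
    EvalCase("do the thing", "ask_clarification", {}, "which customer"),
]

results = evaluate(fake_agent, cases)
print(results)
```

Here the stand-in picks the right tool every time but passes the wrong customer once, which the argument-accuracy metric surfaces. With a real agent, run each case several times, since outputs vary between runs.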

An AI agent’s true value isn't just in what it does when everything goes perfectly. It’s about how it behaves when things inevitably go wrong. I’d rather have an agent that fails predictably than one that succeeds randomly.

Building a Modern Deployment Pipeline

Once you’re feeling good about the agent's performance in testing, it's time to get it out the door. A solid CI/CD (Continuous Integration/Continuous Deployment) pipeline is non-negotiable. It’s what lets you move from your laptop to production smoothly and safely. As you get into this stage, having a good grasp of modern development operations is a game-changer. It’s also incredibly helpful to have your team structured effectively; there are some excellent guides on mastering DevOps team roles that can get you started.

For an AI agent, your pipeline needs a few special ingredients. On top of your usual unit and integration tests, you absolutely must automate your evaluation suite. This means every single time a developer pushes a change, your full battery of performance tests runs automatically. Think of it as your safety net—it prevents a small code tweak from accidentally lobotomizing your agent's reasoning ability.

When it's go-time, please, don't just flip a switch for all your users. A phased rollout is the only sane way to do this and minimize risk.

- Canary Release: Start by deploying the new version to a tiny slice of your user base, maybe just 5%. Watch it like a hawk. If everything looks stable and performance is good, you can slowly open the floodgates.

- A/B Testing: A more scientific approach is to run the new agent alongside the old one. You serve different versions to different users and compare the metrics head-to-head. This gives you cold, hard data on whether your changes actually made things better.
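For a canary release, users should be assigned consistently so nobody flips between agent versions mid-conversation. A common approach, sketched here with hypothetical function names and salt, hashes the user ID into 100 stable buckets; the same bucketing can assign A/B variants.

```python
import hashlib


def rollout_bucket(user_id: str, salt: str = "agent-v2") -> int:
    """Map a user to a stable bucket 0-99; the same user always gets the same bucket."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100


def choose_agent_version(user_id: str, canary_percent: int = 5) -> str:
    """Route roughly canary_percent of users to the candidate version."""
    return "candidate" if rollout_bucket(user_id) < canary_percent else "stable"


user_ids = [f"user-{i}" for i in range(10_000)]
share = sum(choose_agent_version(u) == "candidate" for u in user_ids) / len(user_ids)
print(f"{share:.1%} of users on the candidate version")
```

Widening the rollout is then just raising `canary_percent`; users already in the canary stay there, and changing the salt reshuffles everyone for the next experiment.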

What to Do After You Go Live

Deployment isn't the end. It's the beginning of a never-ending feedback loop. Once your agent is interacting with real users, you need to become obsessed with monitoring its performance and listening to what people are saying.

You’ll want to track the technical stuff—API latency, error rates, and especially your token consumption costs. But the real gold is in the user feedback. Add simple thumbs-up/thumbs-down buttons to every interaction. Create an easy way for users to report when things go sideways. This qualitative feedback tells you not just what the agent did, but how the user felt about it.
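A minimal in-process sketch of those signals, using a hypothetical `AgentMetrics` class. In production you would ship these numbers to your monitoring stack, and turning token counts into cost means multiplying by your provider's current per-token prices.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentMetrics:
    requests: int = 0
    errors: int = 0
    total_tokens: int = 0
    latencies_ms: list = field(default_factory=list)
    feedback: Counter = field(default_factory=Counter)

    def record_request(self, latency_ms: float, tokens_used: int, failed: bool = False) -> None:
        self.requests += 1
        self.errors += int(failed)
        self.total_tokens += tokens_used
        self.latencies_ms.append(latency_ms)

    def record_feedback(self, thumbs_up: bool) -> None:
        self.feedback["up" if thumbs_up else "down"] += 1

    def summary(self) -> dict:
        rated = self.feedback["up"] + self.feedback["down"]
        satisfaction: Optional[float] = self.feedback["up"] / rated if rated else None
        return {
            "error_rate": self.errors / self.requests if self.requests else 0.0,
            "avg_latency_ms": (sum(self.latencies_ms) / len(self.latencies_ms)
                               if self.latencies_ms else 0.0),
            "total_tokens": self.total_tokens,
            "satisfaction": satisfaction,  # share of thumbs-up among rated answers
        }


metrics = AgentMetrics()
metrics.record_request(latency_ms=820, tokens_used=1500)
metrics.record_request(latency_ms=1180, tokens_used=2100, failed=True)
for vote in (True, True, False):
    metrics.record_feedback(vote)
print(metrics.summary())
```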

This tight cycle of deploying, monitoring, and iterating is how you evolve. It's how you build an AI agent that doesn't just work on launch day but actually gets smarter, more reliable, and more valuable over time.

Common Questions About Building an AI Agent

Even with a solid plan, building an AI agent for the first time brings up a ton of questions. This space is moving so fast that what was true six months ago might not be today. For B2B and SaaS companies, the practical side of things—cost, security, and just making it work—is always front and center.

Let's get into some of the questions I hear most often.

How Much Does It Cost to Build and Run an AI Agent?

This is always the first question, and the honest answer is: it really depends. The initial development is one thing, but the real cost you need to watch is the ongoing operational expense. Most of that comes from the LLM API calls your agent makes every single day.

A simple proof-of-concept, maybe using a cheaper model to test an idea, might only set you back a few bucks a month. But once you scale up to a production agent handling thousands of customer interactions inside your SaaS product, those API bills can climb fast. The trick is to be smart about it from the get-go.

Here’s how we keep those costs from spiraling:

- Start with the "good enough" models. Don't jump straight to the most expensive, powerful LLM. For most development and testing, models like GPT-4o-mini or Claude 3 Haiku are perfectly capable and way cheaper. You can always upgrade later if you hit a wall.

- Cache everything you can. If users are asking the same questions over and over, there's no reason to call the LLM every single time. Cache those common responses and serve them directly. It’s a huge cost-saver.

- Set hard limits. You need guardrails. Implement usage caps and alerts to keep a close eye on token consumption. That way, a sudden spike in activity won't lead to a nasty surprise on your monthly bill.
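The caching and hard-limit ideas can be sketched together in a small wrapper. Treat this as an illustration under assumptions: `CachedLLM` is a hypothetical class, the cache only matches prompts that are identical after normalizing case and whitespace, and the 80% alert threshold is arbitrary. In a multi-tenant SaaS product, only cache answers that don't depend on a specific customer's data.

```python
import hashlib
from typing import Callable


class BudgetExceeded(RuntimeError):
    pass


class CachedLLM:
    """Wraps an LLM call with an exact-match response cache and a token budget."""

    def __init__(self, llm: Callable[[str], tuple[str, int]], token_budget: int):
        self.llm = llm                  # returns (answer, tokens_used)
        self.token_budget = token_budget
        self.tokens_used = 0
        self.cache: dict[str, str] = {}

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different phrasings share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode()).hexdigest()

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self.cache:
            return self.cache[key]      # cache hit: no API call, no tokens spent
        # Checked before each call, so the final call can overshoot the budget slightly.
        if self.tokens_used >= self.token_budget:
            raise BudgetExceeded(f"Token budget of {self.token_budget} reached")
        answer, tokens = self.llm(prompt)
        self.tokens_used += tokens
        if self.tokens_used >= 0.8 * self.token_budget:
            print(f"ALERT: {self.tokens_used}/{self.token_budget} tokens used")
        self.cache[key] = answer
        return answer


calls = []


def fake_llm(prompt: str) -> tuple[str, int]:
    """Stand-in for a paid API call that costs 400 tokens."""
    calls.append(prompt)
    return f"answer to: {prompt}", 400


cached = CachedLLM(fake_llm, token_budget=1000)
cached.ask("How do I reset my password?")
cached.ask("how do I reset   my password?")  # served from cache
cached.ask("How do I export data?")           # triggers the 80% alert
```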

How Do I Keep My AI Agent Secure?

You can't treat security as a feature you add at the end. It has to be baked in from day one. A poorly secured agent is a massive liability, potentially exposing sensitive company or customer data. The biggest weak points are almost always how the agent handles user input and connects to your other systems.

First rule: never, ever hardcode API keys or credentials in your code. Use a proper secrets manager or environment variables. Just as important is screening user inputs to reduce the risk of prompt injection, where someone tries to sneak in instructions that make your agent do things it shouldn't.

When you give an agent access to your internal APIs, live by the principle of least privilege. Grant it the absolute bare minimum permissions it needs to do its job, and nothing more. This single practice dramatically shrinks your attack surface.
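A sketch of least privilege at the tool layer, with hypothetical tool names and scopes. Unknown tools are denied by default. Treat this as a second line of defense: the real enforcement should come from the scopes on the token your API issues to the agent.

```python
# Hypothetical scopes: each tool declares exactly what it needs.
TOOL_SCOPES: dict[str, set[str]] = {
    "read_invoices": {"invoices:read"},
    "send_email": {"email:send"},
    "delete_account": {"accounts:delete"},
}

# The agent's credential is issued with only the scopes its job requires.
AGENT_GRANTED_SCOPES = frozenset({"invoices:read", "email:send"})


class PermissionDenied(Exception):
    pass


def authorize_tool_call(tool_name: str, granted: frozenset = AGENT_GRANTED_SCOPES) -> None:
    """Raise unless the agent holds every scope the tool requires."""
    required = TOOL_SCOPES.get(tool_name)
    if required is None:
        raise PermissionDenied(f"Unknown tool: {tool_name}")  # deny by default
    missing = required - granted
    if missing:
        raise PermissionDenied(f"{tool_name} requires {sorted(missing)}")


authorize_tool_call("read_invoices")  # allowed: returns None
try:
    authorize_tool_call("delete_account")
except PermissionDenied as exc:
    print(f"Blocked: {exc}")
```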

What Is the Biggest Challenge in Production?

From my experience, getting a prototype working isn't the hard part. The real challenge is making the agent reliable and predictable at scale. These models aren't deterministic; they can give you a slightly different answer every time, even with the same input. That's a scary thought when paying customers are depending on it.

To get past this, you need to think beyond simple unit tests. A robust evaluation framework that constantly checks if the agent is completing its tasks correctly and flags hallucinations is non-negotiable. You also need rock-solid error handling and fallback logic. What happens when the agent gets confused? It’s far better for it to say, "I don't know," than to confidently make something up.
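A minimal sketch of that fallback behavior, assuming hypothetical `retrieve` and `generate` functions supplied by your stack: if retrieval finds nothing to ground an answer, or generation keeps failing, the agent returns an honest "I don't know" instead of guessing.

```python
import time
from typing import Callable

FALLBACK = "I don't know the answer to that yet. I've flagged it for our support team."


def answer_with_fallback(
    question: str,
    retrieve: Callable[[str], list],
    generate: Callable[[str, list], str],
    retries: int = 2,
) -> str:
    sources = retrieve(question)
    if not sources:
        return FALLBACK  # nothing to ground the answer in: don't guess
    for attempt in range(retries + 1):
        try:
            answer = generate(question, sources)
        except TimeoutError:
            time.sleep(0.1 * 2 ** attempt)  # simple exponential backoff before retrying
            continue
        if answer.strip():
            return answer
    return FALLBACK  # every attempt failed or came back empty


# Hypothetical stand-ins for a knowledge base and an LLM call.
DOCS = {"refund": ["Refunds are available within 30 days of purchase."]}


def retrieve(question: str) -> list:
    return [doc for topic, docs in DOCS.items() if topic in question.lower() for doc in docs]


def generate(question: str, sources: list) -> str:
    return f"According to our policy: {sources[0]}"


print(answer_with_fallback("What is your refund policy?", retrieve, generate))
print(answer_with_fallback("Do you support SAML?", retrieve, generate))
```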

Can I Build an AI Agent Without an ML Background?

Yes. 100%. The game has completely changed. Hosted LLM APIs take care of training and serving the models, and frameworks like LangChain handle much of the orchestration around them. If you're a developer who can work with APIs and understands basic software architecture, you're already most of the way there.

The work has shifted from training models to orchestrating them. Your job is to be the glue, connecting the LLM to your data, your tools, and a memory system. While a deep understanding of ML is definitely a plus for fine-tuning and squeezing out every last drop of performance, it's absolutely not a prerequisite to get started anymore.

At MakeAutomation, we help B2B and SaaS businesses build and deploy powerful AI solutions that actually work. If you're ready to create an AI agent that delivers real value, let's connect. Explore our tailored frameworks at MakeAutomation.